As a further follow-up to the post below, see also Sam Altman of OpenAI claiming that the age of giant AI models is already over, and Greg Brockman’s statement that GPT-5 will be released ‘incrementally’. Although this will in no way dampen the tremendous applications of generative AI, AI more broadly, and other smart technology, it helps to get a more realistic picture of AI advancement and research.

New AI model designs, or architectures, and further tuning based on human feedback are promising directions that many AI researchers are already exploring. Beyond these, it is also clear that significant new breakthroughs will be required, as also discussed in my book “Democratizing Artificial Intelligence to Benefit Everyone: Shaping a Better Future in the Smart Technology Era” (jacquesludik.com). See also the perspectives of Yann LeCun and others on this.

OpenAI’s CEO Says the Age of Giant AI Models Is Already Over

https://lnkd.in/dZPGCPtK

GPT 5 Will be Released ‘Incrementally’ — 5 Points from Brockman Statement [plus Timelines & Safety]

https://lnkd.in/dedGQVBJ

#ai #technology #future #safety #artificialintelligence

SwissCognitive, World-Leading AI Network, Machine Intelligence Institute of Africa | MIIA, Cortex Logic, Cortex Group, DSNai – Data Science Nigeria, AICE Africa, DeepMind, Google, Meta AI, Microsoft, Amazon Web Services (AWS), Stanford Institute for Human-Centered Artificial Intelligence (HAI), World Economic Forum, OECD.AI, VERCHOOL HOLDINGS, Blockchain Company

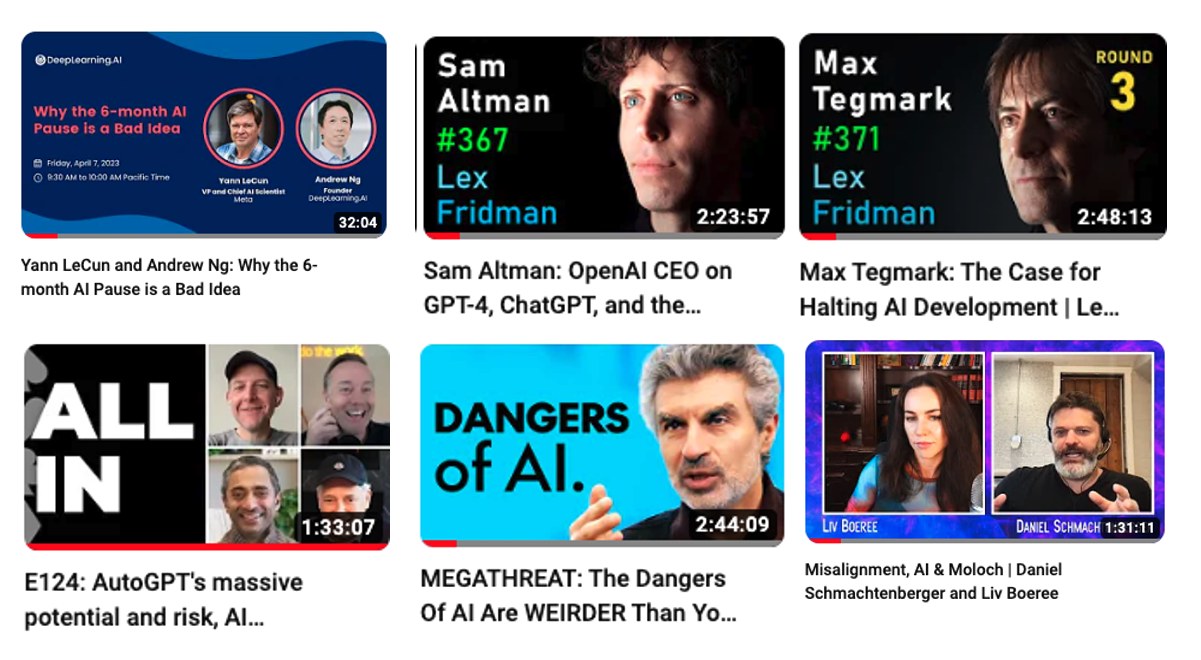

As part of further sense-making on this hotly debated #AI topic, and to get a more balanced perspective, see also the following videos (in addition to my post: https://lnkd.in/dEDi994V):

Lex Fridman’s interview with Max Tegmark: “The Case for Halting AI Development”

https://lnkd.in/dJRAcsip

Yann LeCun and Andrew Ng: “Why the 6-month AI Pause is a Bad Idea”

https://lnkd.in/dJPdbAZZ

Sam Altman, OpenAI’s CEO, confirms the company isn’t training GPT-5 and ‘won’t for some time’

https://lnkd.in/dYxHzrsw

https://lnkd.in/dWkRrxU7

Sam Altman: OpenAI CEO on GPT-4, ChatGPT, and the Future of AI | Lex Fridman Podcast

https://lnkd.in/d2WQ_ADS

All-In Podcast (Jason Calacanis, Chamath Palihapitiya, David O. Sacks, David Friedberg) on AutoGPT’s massive potential and risk, AI regulation

https://lnkd.in/dNKHx3r4

Tom Bilyeu’s Impact Theory MEGATHREAT: The Dangers Of AI Are WEIRDER Than You Think | Yoshua Bengio

https://lnkd.in/d7i_Wruu

Misalignment, AI & Moloch | Daniel Schmachtenberger and Liv Boeree

https://lnkd.in/dUDdu_kX

See also more on the future of AI in the book “Democratizing Artificial Intelligence to Benefit Everyone: Shaping a Better Future in the Smart Technology Era.” Jacquesludik.com

See also the Democratizing AI Newsletter: https://lnkd.in/eK8AxHG2

SwissCognitive, World-Leading AI Network, Machine Intelligence Institute of Africa | MIIA, DSNai – Data Science Nigeria, AICE Africa, Joscha Bach, OECD.AI, Google, DeepMind, Meta AI, DeepLearning.AI, Future of Life Institute (FLI), Stanford Institute for Human-Centered Artificial Intelligence (HAI), Cortex Logic, VERCHOOL HOLDINGS

#artificialintelligence #technology #datascience #future #podcast #deeplearning #google

We need wisdom to make proper sense of what we should do with AI innovation and its impact on society and the world at large. As part of the sense-making process, I highly recommend also listening to Yann LeCun and Andrew Ng’s conversation this Friday to get their perspectives on this topic of a 6-month AI pause.

I ran a similar poll (https://lnkd.in/ddU__4zY) to those run by others, with seemingly similar outcomes: most people seem not to want the pause proposed by the Open Letter via the Future of Life Institute (FLI). As also communicated in my book “Democratizing AI to Benefit Everyone: Shaping a Better Future in the Smart Technology Era” (jacquesludik.com) and https://lnkd.in/eK8AxHG2, I fully understand and empathise with the Open Letter, FLI, and your perspective. I specifically outlined the AI risks around human agency, data abuse, job loss, dependence lock-in and mayhem, and also emphasised specific solutions such as “Improve human collaboration across borders and stakeholder groups”, “Develop policies to assure that development of AI will be directed at augmenting humans and the common good”, and “Shift the priorities of economic, political and education systems to empower individuals to stay ahead in the ‘race with the robots’”.

That said, as part of sense-making on where we are with GPT-4, we are still far away from AGI and need some substantial breakthroughs that I can elaborate on (see also Yann LeCun’s views on this). One of the problems lies in the accessibility of these narrow AI applications to the general public: they are already having potential unintended consequences (see also the Open Letter) similar to, and potentially worse than, the ones we already saw with social media (the good and the bad) and the internet more broadly.

As we have seen on Twitter, there are very diverse opinions from many thought leaders on both sides of the aisle. They range from FLI, Max Tegmark, Yoshua Bengio and Elon Musk supporting the 6-month moratorium (Max also saying that “rushing toward prematurely is IMHO likely to kill democracy”) to Andrew Ng calling the pause a “terrible idea” and asking that we balance the huge value AI is creating against realistic risks, Yann LeCun and Joscha Bach comparing the present wave of generative AI to that of the “printing press”, and Pedro Domingos jokingly tweeting that “the AI moratorium letter was an April Fools’ joke that came out a few days early due to a glitch” and “we must put a 6-month moratorium on SpaceX launches to avoid overpopulating Mars.” Joscha also mentions that “humanity in its present form is a radically misaligned super intelligence that is going to get everyone killed if it does not become coherent.” I tend to agree with this, which is why I have proposed very specific solutions in my book “Democratizing AI to Benefit Everyone” (jacquesludik.com) and an MTP for Humanity and its associated goals.

As part of further sense-making on this hotly debated #AI topic, and to get a more balanced perspective, see also the following videos:

Lex Fridman’s interview with Max Tegmark: “The Case for Halting AI Development”

https://www.youtube.com/watch?v=VcVfceTsD0A

Yann LeCun and Andrew Ng: “Why the 6-month AI Pause is a Bad Idea”

https://www.youtube.com/watch?v=BY9KV8uCtj4

Sam Altman, OpenAI’s CEO, confirms the company isn’t training GPT-5 and ‘won’t for some time’

https://www.theverge.com/2023/4/14/23683084/openai-gpt-5-rumors-training-sam-altman

https://youtu.be/4ykiaR2hMqA

All-In Podcast (Jason Calacanis, Chamath Palihapitiya, David O. Sacks, David Friedberg) on AutoGPT’s massive potential and risk, AI regulation

https://youtu.be/i1gMhEUXeNk

See also more on the future of AI in the book “Democratizing Artificial Intelligence to Benefit Everyone: Shaping a Better Future in the Smart Technology Era.” Jacquesludik.com

#artificialintelligence SwissCognitive, World-Leading AI Network, Machine Intelligence Institute of Africa | MIIA, DSNai – Data Science Nigeria, AICE Africa, OECD.AI, Google, DeepMind, Meta AI, DeepLearning.AI, Future of Life Institute (FLI), Stanford Institute for Human-Centered Artificial Intelligence (HAI)